What was (A)I made for?

Is artificial intelligence bringing about a new world? Or is it all the old human problems cloaked in a new hoodie?

The real A.I. threat? Not some future Matrix turning us all into rechargeable batteries, but today’s A.I. industry demanding all of our data, labor, and energy right now.

The vast tech companies behind generative A.I. (the latest iteration of the tech, responsible for all the hyperrealistic puppy videos and uncanny automated articles) have been busy exploiting workers, building monopolies, finding ways to write off their massive environmental impacts, and disempowering consumers while sucking up every scrap of data they produce.

But generative A.I.’s hunger for data far outstrips that of earlier digital tools, so firms are doing this on a vaster scale than we’ve seen in any previous technology effort. (OpenAI’s Sam Altman is trying to talk world leaders into committing $7 trillion to his project, a sum exceeding GDP growth for the entire world in 2023.) And that’s largely in pursuit of a goal — A.G.I., or “artificial general intelligence” — that is, so far as anyone can tell, more ideological than useful.

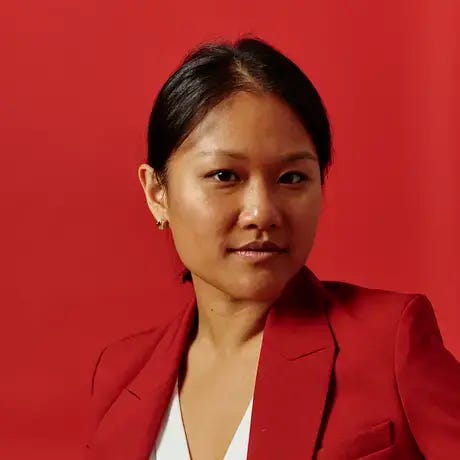

Karen Hao, who’s covered the A.I. industry for MIT Technology Review, The Wall Street Journal, and most recently The Atlantic, is one of the few writers who has focused specifically on the human, environmental, and political costs of emerging A.I. technology. Below, she tells us about the very physical supply chain behind digital technologies, the mix of magical thinking and profit maximization that drives A.I.’s most influential advocates, how A.I. advances might jeopardize climate goals, and who stands to gain and lose the most from widespread adoption of generative A.I.

A lot has been promised about what A.I. will supposedly do for us, but you’ve been writing mostly about what A.I. might cost us. What are the important hidden costs people are missing in this A.I. transition that we’re going through?

I like to think about the fact that A.I. has a supply chain like any other technology; there are inputs that go into the creation of this technology, data being one, and then computational power or computer chips being another. And both of those have a lot of human costs associated with them.

First of all, when it comes to data, the data comes from people. And that means that if the companies are going to continue expanding their A.I. models and trying to, in their words, “deliver more value” to customers, that fuels a surveillance capitalism business model where they’re continuing to extract data from us. But the cleaning and annotation of that data requires a lot of labor, a lot of low-income labor. Because when you collect data from the real world, it’s very messy, and it needs to be curated and neatly packaged in order for a machine learning model to get the most out of it. And a lot of this work — this is an entire industry now, the data annotation industry — is exported to developing countries, to Global South countries, just like many other industries before it.

Have we just been trained to miss this by our experience with the outsourcing of manufacturing, or by what’s happened to us as consumers of online commerce? And is this really just an evolution of what we’ve been seeing with big tech already?

There’s always been outsourcing of manufacturing. And in the same way, we now see a lot of outsourced work happening in the A.I. supply chain. But the difference is that these are digital products. And I don’t think people have fully wrapped their heads around the fact that there is a very physical and human supply chain to digital products.

A lot of that is because of the way that the tech industry talks about these technologies. They talk about it like, “It comes from the cloud, and it works like magic.” And they don’t really talk about the fact that the magic is actually just people, teaching these machines, very meticulously and under great stress and sometimes trauma, to do the right things. And the A.I. industry is built on surveillance capitalism, as internet platforms in general have been built on this ad-targeting business that’s in turn been built on the extraction of our data.

But the A.I. industry is different in the sense that it has an even stronger imperative to extract that data from us, because the amount of data that goes into building something like ChatGPT completely dwarfs the amount of data that was going into building lucrative ad businesses. We’ve seen these stories showing that OpenAI and other companies are running out of data. And that means that they face an existential business crisis: if there is no more data, they have to generate it from us in order to continue advancing their technology.

Connecting these issues seems like the way people really need to be framing this stuff, but it’s a frame that most people are still missing. These are all serious anti-democratic threats.